
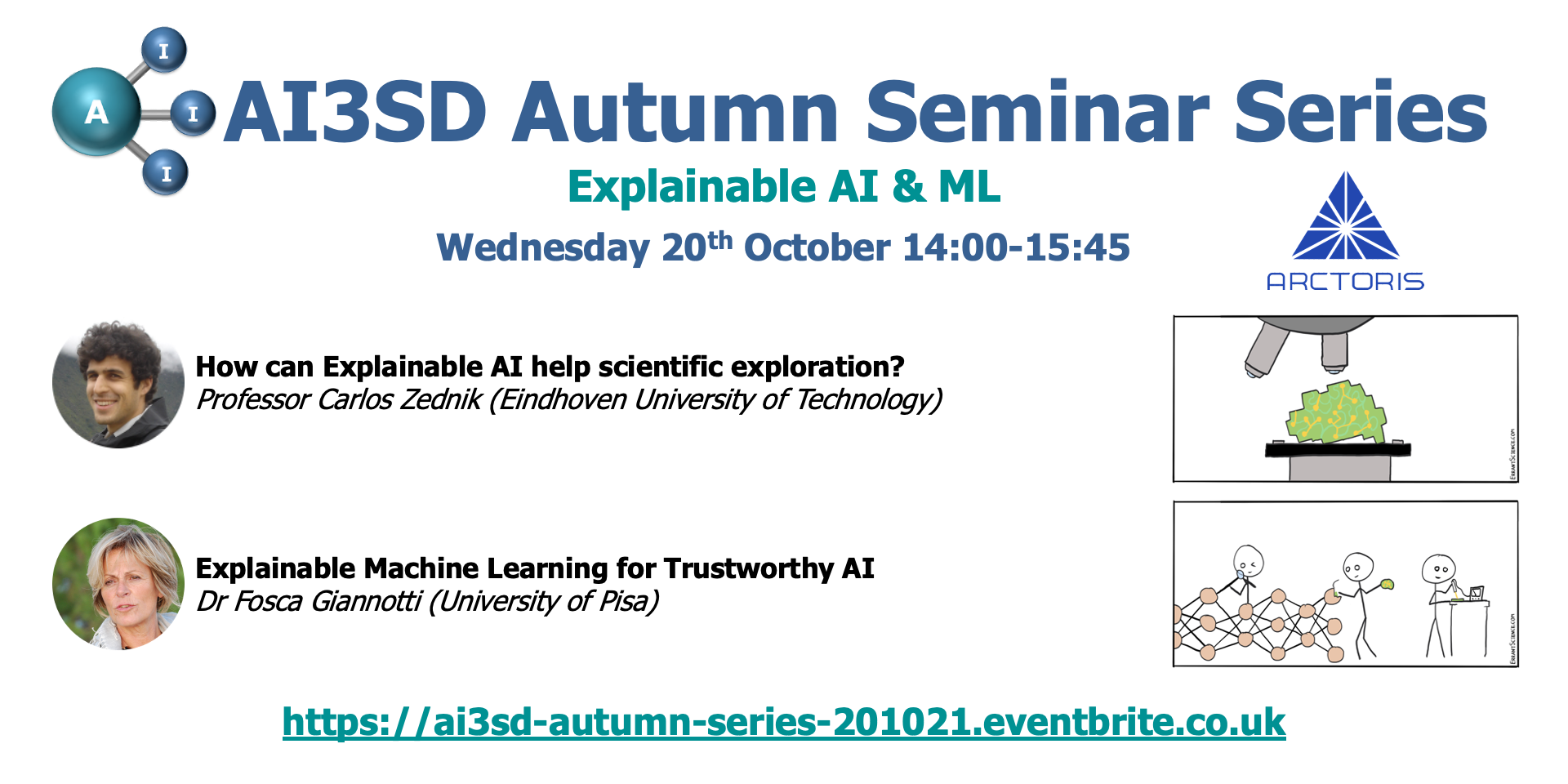

20/10/2021 – AI3SD Autumn Seminar II: Explainable AI & ML

20th October 2021 @ 2:00 pm - 3:45 pm

Free

Eventbrite Link: https://ai3sd-autumn-series-201021.eventbrite.co.uk

Description:

This seminar forms part of the AI3SD Online Seminar Series that will run across the autumn (from October 2021 to December 2021). This seminar will be run via Zoom. When you register on Eventbrite, you will receive a Zoom registration email alongside your standard Eventbrite registration email. Where speakers have given permission to be recorded, their talks will be made available on our AI3SD YouTube Channel. The theme for this seminar is Explainable AI & ML.

Agenda

- 14:00-14:45: How can Explainable AI help scientific exploration? – Professor Carlos Zednik (Eindhoven University of Technology)

- 14:45-15:00: Coffee Break

- 15:00-15:45: Explainable Machine Learning for Trustworthy AI – Dr Fosca Giannotti (University of Pisa)

Abstracts & Speaker Bios

- How can Explainable AI help scientific exploration? – Professor Carlos Zednik: Although models developed using machine learning are increasingly prevalent in scientific research, their opacity poses a threat to their utility. Explainable AI (XAI) aims to diminish this threat by rendering opaque models transparent. But XAI is more than just the solution to a problem: it can also play an invaluable role in scientific exploration. In this talk, I will consider different techniques from Explainable AI to demonstrate their potential contribution to different kinds of exploratory activities. In particular, I argue that XAI tools can be used (1) to better understand what a “big data” model is a model of, (2) to engage in causal inference over high-dimensional nonlinear systems, and (3) to generate algorithmic-level hypotheses in cognitive science and neuroscience.

Bio: My research centers on the explanation of natural and artificial cognitive systems. Many of my articles specify norms and best-practice methods for cognitive psychology, neuroscience, and explainable AI. Others develop philosophical concepts and arguments with which to better understand scientific and engineering practice. I am the PI of the DFG-funded project on Generalizability and Simplicity of Mechanistic Explanations in Neuroscience. In addition to my regular research and teaching, I do consulting work on the methodological, normative, and ethical constraints on artificial intelligence, my primary expertise being transparency in machine learning. In this context I have an ongoing relationship with the research team at neurocat GmbH, and have contributed to AI standardization efforts at the German Institute for Standardization (DIN). Before arriving in Eindhoven I was based at the Philosophy-Neuroscience-Cognition program at the University of Magdeburg, and prior to that, at the Institute of Cognitive Science at the University of Osnabrück. I received my PhD from the Indiana University Cognitive Science Program, after receiving a Master’s degree in Philosophy of Mind from the University of Warwick and a Bachelor’s degree in Computer Science and Philosophy from Cornell University. You can find out more about me on Google Scholar, PhilPapers, Publons, and Twitter.

- Explainable Machine Learning for Trustworthy AI – Dr Fosca Giannotti: Black box AI systems for automated decision making, often based on machine learning over (big) data, map a user’s features into a class or a score without exposing the reasons why. This is problematic not only for the lack of transparency, but also for possible biases inherited by the algorithms from human prejudices and collection artifacts hidden in the training data, which may lead to unfair or wrong decisions. The future of AI lies in enabling people to collaborate with machines to solve complex problems. Like any efficient collaboration, this requires good communication, trust, clarity and understanding. Explainable AI addresses such challenges, and for years different AI communities have studied this topic, leading to different definitions, evaluation protocols, motivations, and results. This lecture provides a reasoned introduction to the work of Explainable AI (XAI) to date, and surveys the literature with a focus on machine learning and symbolic AI related approaches. We motivate the need for XAI in real-world and large-scale applications, while presenting state-of-the-art techniques and best practices, as well as discussing the many open challenges.

Bio: Fosca Giannotti is a director of research in computer science at the Information Science and Technology Institute “A. Faedo” of the National Research Council, Pisa, Italy. Fosca Giannotti is a pioneering scientist in mobility data mining, social network analysis and privacy-preserving data mining. Fosca leads the Pisa KDD Lab – Knowledge Discovery and Data Mining Laboratory http://kdd.isti.cnr.it, a joint research initiative of the University of Pisa and ISTI-CNR, founded in 1994 as one of the earliest research labs centered on data mining. Fosca’s research focus is on social mining from big data: smart cities, human dynamics, social and economic networks, ethics and trust, diffusion of innovations. She has coordinated tens of European projects and industrial collaborations. Fosca is currently the coordinator of SoBigData, the European research infrastructure on Big Data Analytics and Social Mining http://www.sobigdata.eu, an ecosystem of ten cutting-edge European research centres providing an open platform for interdisciplinary data science and data-driven innovation. She is also the PI of the ERC Advanced Grant entitled XAI – Science and technology for the explanation of AI decision making. She is a member of the steering board of the CINI-AIIS lab. On March 8, 2019 she was featured as one of the 19 Inspiring women in AI, BigData, Data Science, Machine Learning by KDnuggets.com, the leading site on AI, Data Mining and Machine Learning https://www.kdnuggets.com/2019/03/women-ai-big-data-science-machine-learning.html.