This event was the first of the AI3SD Autumn Seminar Series, which runs from October 2021 to December 2021. The seminar was hosted online via a Zoom webinar on the theme of Linked Data, Ontologies & Deep Learning, and consisted of two talks on the subject. Below are the videos of the talks, with speaker biographies and Q & A transcripts. The full playlist of this seminar can be found here.

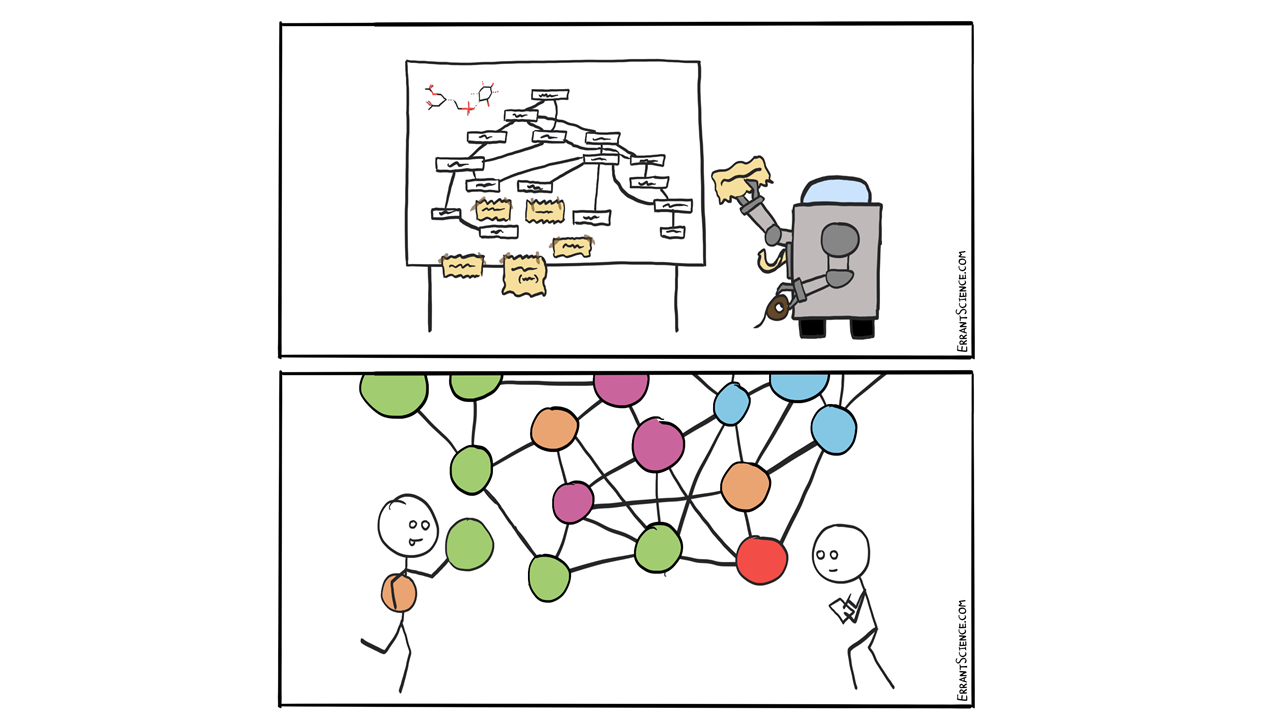

Automated Chemical Ontology Expansion using Deep Learning – Dr Janna Hastings

I am a computer scientist interested in developing artificial intelligence-based computational systems to support research across the biological and social sciences. I am particularly interested in the interface between data science, i.e. algorithms for deriving inferences and predictions based on structured and unstructured data, and knowledge science, i.e. research that amasses, integrates and harnesses what we already know and channels that back towards efforts to make novel discoveries, towards a genuinely cumulative discovery frontier. To this end I have actively contributed to research in computational knowledge representation and reasoning, to community-wide knowledge integration via building semantic standards, and to scientific discovery research using computational approaches across a range of domains.

Q & A

Q1: When did you first start getting interested in using semantic web technologies and working with ontologies? And what sparked that interest?

I was hired many years ago by the EBI, the European Bioinformatics Institute, to work on ChEBI. Before that I didn’t really know about ontologies, so I was thrown in at the deep end. I did study computer science and logic-based knowledge representation, but in those days we didn’t have OWL yet. Now we have OWL, all kinds of different reasoners, and all sorts of interesting things that we can do.

Q2: How robust are the machine learning based predictions for small changes in the class predictions?

If you have even a small change in the input structure, that might mean that it should be predicted to belong to a different class. Even small changes may have a big semantic impact on your classification. So, you would want it to be sensitive to the differences that matter and not sensitive to the differences that don’t matter. That’s the nuance of evaluation. In order to do the evaluation, we used an unbiased sample of classes from ChEBI and took a test and training data split from the class selection in a totally randomized way. We hope that what we see in terms of performance therefore really tracks chemically interesting performance in an unbiased way.
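The randomized test and training split described in this answer can be sketched as follows. This is a minimal illustration only, not the project's evaluation code: the function name `random_split`, the 20% test fraction, the seed, and the placeholder compound and class identifiers are all assumptions.

```python
import random


def random_split(items, test_fraction=0.2, seed=0):
    """Shuffle items with a fixed seed and split them into (train, test)."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_test = int(round(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]


# Hypothetical (compound, class) pairs standing in for ChEBI members.
labelled = [(f"compound_{i}", f"class_{i % 5}") for i in range(100)]
train, test = random_split(labelled, test_fraction=0.2, seed=42)
```

Fixing the seed keeps the split reproducible, while the shuffle means no compound is chosen for the test set because of its position or class.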

Q3: Where can we find the code of the project?

I do not have the link right in front of me, but if you go to the paper you will find the GitHub link.

Q4: What kind of ontologies can this approach not be used for? For example, how dependent is it on a computer representation of the things that are to be categorized?

So, what this approach depends on is that there is some information annotated to the members that will be predictive of the class structure. So, it will work for types of ontology where you have a class structure that tracks something structural. In chemistry it’s obvious what the structure is. It would work for proteins and sequence classifications too. It would probably work for pathways, which have some kind of structural information. It may work for text-type ontologies where the entities are well described in some sort of text segment. But there are obviously lots of ontologies for which there’s no annotation information that you can use for learning, and it won’t work for those.

Q5: So, BioAssays would be tricky then?

Probably, unless you have descriptions in text which have the information.

Q6: So I’m guessing this hasn’t been tried with the BioAssay Ontology?

No

Q7: How is the performance of classification specifically for natural product compounds?

So, we didn’t look at that as a different sub-group. ChEBI obviously has natural products as classes as well as classes that are not natural products. There is another work that I’m aware of. I’ve forgotten the authors’ names, but it’s called NP classifier. They are using deep learning to predict natural product classes specifically, so they don’t look at the general case; they just look at a list of a few hundred natural product classes and use a similar kind of deep learning approach there. They found that their approach was working quite well, so I think you should start by looking at that. Search for “NP classifier”; I think you’ll find the paper that way.

Q8: Is the output of the learning task the association of chemical compounds to a single class, or multiple classes, or other structures of ChEBI? What else, other than class identification, are human curators curating?

The output of the learning task is the association of a compound with multiple classes. The human curators are checking all kinds of things and assembling all kinds of information. For example, they check the names of the chemicals for correctness, and they assemble things like trivial names, which you can’t predict automatically. This particular learning task doesn’t give you that additional information.
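To illustrate what associating one compound with multiple classes means in practice, here is a minimal sketch. It assumes the model emits an independent score per class and that a fixed threshold decides membership. The threshold, the scores, and the function name `assign_classes` are all hypothetical and are not taken from the talk.

```python
def assign_classes(scores, threshold=0.5):
    """Return every class whose score reaches the threshold (multi-label)."""
    return sorted(name for name, score in scores.items() if score >= threshold)


# Made-up per-class scores for a single compound.
scores = {"carboxylic acid": 0.93, "aromatic compound": 0.71, "salt": 0.08}
predicted = assign_classes(scores)
```

Unlike single-label classification, which would keep only the top-scoring class, this keeps every class that passes the threshold.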

Q9: You mentioned the representation used for the classical machine learning training limited the prediction ability for certain descriptors. Which representation was used and was there a reason for this choice?

For the classical approaches we used the standard fingerprint from RDKit, which is a cheminformatics library for Python. The reason for that choice was that it was the most straightforward way to get a representation that could be used for the learning task. In fact, there are other fingerprints even within RDKit which would potentially contain more information. They are a little bit slower to compute, but they would potentially address the limitation for classes like salts, which lacked the information needed to make predictions. So, finding the best fingerprint for the learning is something we didn’t try; we just used the simplest, quickest one.

Q10: Do you think that rule-based predictions (classifier) can be more robust?

There’s no question about that for rule-based prediction: with a rule, if your antecedent is true, your consequent is true. So, you are staying in territory where you are on firm ground and have some confidence, whereas with a learning approach it’s a kind of statistical association that you’re learning, and therefore you can get really wrong predictions. But with the rule-based approach, although for the most part you might not get wrong predictions, your predictions might be too general to be useful. So, getting the best prediction with a rule-based approach may involve hugely complex and perhaps impossible to maintain rules. Whereas, with a learning approach you can hope that at least a certain percentage of the time you really get the best prediction, because that’s what you’ve tried to learn. So, there are advantages and disadvantages.
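The trade-off can be made concrete with a toy rule set. This is a deliberately simplified sketch and not how any real chemical classifier is implemented: compounds are reduced to hypothetical atom-count dictionaries, and the two rules and their class names are illustrative only.

```python
# Each rule pairs a class name with an antecedent over atom counts.
RULES = [
    ("organic molecular entity", lambda atoms: atoms.get("C", 0) > 0),
    ("chlorine molecular entity", lambda atoms: atoms.get("Cl", 0) > 0),
]


def classify_by_rules(atoms):
    """Return every class whose antecedent holds for the given atom counts."""
    return [name for name, antecedent in RULES if antecedent(atoms)]


chloroform = {"C": 1, "H": 1, "Cl": 3}
ethanol = {"C": 2, "H": 6, "O": 1}

chloroform_classes = classify_by_rules(chloroform)
ethanol_classes = classify_by_rules(ethanol)
```

Both results follow from their antecedents, but ethanol only receives the very general class. Reaching a more specific class would need a rule that inspects the actual structure, which is where rule sets grow complex and hard to maintain.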

DOI LINK TBC

Towards Biological Plausibility Using Linked Open Data – Dr Egon Willighagen

I study the role of machine representation of knowledge and hypotheses in life sciences, metabolomics, drug discovery, and toxicology, involving cheminformatics, chemometrics and semantic web technologies. In the past, I have also applied this research to QSAR and crystallography. Open source programming and Open science are also my main hobbies, resulting in participation in, amongst many others, the Chemistry Development Kit, WikiPathways, Bioclipse, and BridgeDb.

Q & A

Q1: How can a database like ChEMBL be helpful to build our own ML system for drug discovery? To do that you need a defined threshold to differentiate your dataset between 2 states like active and inactive, but on ChEMBL you will get a collection of, e.g., different substrates which were obtained and published in different research labs?

I’ve just read the question about how to use ChEMBL, and about where you put your threshold between active and inactive. We wrote an article some years ago about this and we did not use a classification of active/inactive, but made a regression model instead. This work was done in Uppsala, where they already had a good bit of experience with proteochemometrics. Classification alone is possible, but you can do a lot more with ChEMBL, and then you don’t have this problem of a threshold at all because you’re just making the regression. The semantic part is where we use information about the quality of the study. The information in ChEMBL is a bit crude, but at the time they had a confidence classification of 1 to 9; it wasn’t quite linear, but it at least gives some direction.

The effect of taking into account that assay confidence can be seen in figure 11.
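One way to picture regression that takes assay confidence into account is a weighted least-squares fit, where low-confidence measurements pull less on the model. This sketch is an assumption for illustration: the answer does not say how the confidence score entered the model, and the data, the single descriptor, and the function name `weighted_linear_fit` are all hypothetical.

```python
def weighted_linear_fit(xs, ys, weights):
    """Fit y = slope * x + intercept by weighted least squares."""
    total = sum(weights)
    mean_x = sum(w * x for w, x in zip(weights, xs)) / total
    mean_y = sum(w * y for w, y in zip(weights, ys)) / total
    sxy = sum(w * (x - mean_x) * (y - mean_y) for w, x, y in zip(weights, xs, ys))
    sxx = sum(w * (x - mean_x) ** 2 for w, x in zip(weights, xs))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Made-up data: four consistent points (y = 2x) and one low-confidence outlier.
descriptor = [1.0, 2.0, 3.0, 4.0, 5.0]
activity = [2.0, 4.0, 6.0, 8.0, 0.0]
confidence = [9, 9, 9, 9, 1]  # hypothetical 1 to 9 confidence scores

weighted_slope, _ = weighted_linear_fit(descriptor, activity, confidence)
unweighted_slope, _ = weighted_linear_fit(descriptor, activity, [1] * 5)
```

With these numbers the weighted fit stays closer to the trend of the high-confidence points than the unweighted fit does, and no active/inactive threshold is needed at any point.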

Q2: Are you planning to use any SHACL in the development of your further ontologies or queries? And what do you think might be the advantages of that compared to the current method you’re doing with SPARQL?

SHACL is an alternative implementation of the idea of shape expressions. The short answer is no, because we use ShEx for that. But the approach is quite comparable. They behave slightly differently, but if the question is whether we are using shape expressions, then yes: we are using them for quality control, where a shape represents the minimal amount of information that we need in the data source. Or, as in our paper about the protocol for adding data to Wikidata, it is also about ensuring that the conversion went OK; so we check not only that the data itself is in the form we wanted, but we can also use it to monitor the conversion process itself. So, shape expressions are definitely something that we are using and will continue to use.

Q3: You mentioned conversion, if you are converting data into linked data, what methods do you use?

A variety of things really; it depends on the format in which the data comes. Personally, I’m quite fond of using the Groovy scripting language because it can handle XML quite well, and with Bioclipse, or the current version of that, Bacting, I have access to a number of other libraries that allow me to read Excel spreadsheets and Google spreadsheets. The advantage of using scripting languages is that you’re not just doing the structural reformatting and reorganization, but you can also do a bit of data curation as you go, with a couple of tables that normalize some labels into either a central type or a harmonized label. So, the script is a combination of reformatting and automated curation. I do prefer to automate the curation and conversion as much as possible, just so I don’t have to repeat the work when a new version of the input data comes. This automation process is something that I really quite cherish.
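A script that combines reformatting with automated curation might look like the sketch below. It is written in Python rather than Groovy, and everything specific in it is an assumption: the `LABEL_MAP` lookup table, the `https://example.org/` namespace, the `species` property, and the input row are hypothetical.

```python
# Hypothetical curation table: variant labels mapped to one harmonized label.
LABEL_MAP = {
    "human": "Homo sapiens",
    "h. sapiens": "Homo sapiens",
    "homo sapiens": "Homo sapiens",
}
BASE = "https://example.org/"  # placeholder namespace
RDFS_LABEL = "http://www.w3.org/2000/01/rdf-schema#label"


def normalize(label):
    """Curation step: harmonize known variants, otherwise just trim."""
    cleaned = label.strip()
    return LABEL_MAP.get(cleaned.lower(), cleaned)


def escape(text):
    """Escape backslashes and double quotes for an N-Triples literal."""
    return text.replace("\\", "\\\\").replace('"', '\\"')


def row_to_triples(row):
    """Reformatting step: turn one tabular row into N-Triples lines."""
    subject = f"<{BASE}compound/{row['id']}>"
    name = escape(row["name"].strip())
    species = escape(normalize(row["species"]))
    return [
        f'{subject} <{RDFS_LABEL}> "{name}" .',
        f'{subject} <{BASE}vocab/species> "{species}" .',
    ]


triples = row_to_triples({"id": "c1", "name": " Aspirin ", "species": "Human"})
```

Because the curation lives in the lookup table rather than in manual edits, the same script can be rerun unchanged when a new version of the input data arrives.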

Q4: Definitely. I’ve done quite well with R2RML scripts and libraries, and combined that with JavaScript for conversion. That also took quite a while, but at least once you had the scripts, you could then run them for new data.

Yeah, Ammar Ammar in our group has been playing with RML a bit; here the mapping is in a more formal mapping language that you can use to convert relational databases and other structured data formats into RDF.

Q5: My question is whether you think extension of pathways is something that we could apply my kind of learning approach to. So, having watched the talk before, because you’re obviously working a lot with pathway information, do you think there’s any applicability there?

Yes, I think so. There are a couple of interesting things happening. One bit of machine learning, well, the equivalent of text mining, is OCR on pathway diagrams. The team in San Francisco, Alex Pico’s team, have been using pathway OCR, and this has resulted in a website where they have made this available; you can search there for gene names and you get pathways from figures in articles. Another thing that is of interest is the work by Andra Waagmeester. He was also the one who created the first version of the Semantic Web representation of WikiPathways and did a lot of the Wikidata work, and he has worked on a Pathway Loom, which has the aim of extending pathways by using knowledge from other databases. So that could be partly look-up, but I can quite easily see how you could include other information there. One place where we sort of see this is protein interactions, where data can be based on co-occurrence in an article in the literature or on computational protein interaction strength. Here, they already have that information cached in protein interaction databases, so you don’t really have to include the machine learning directly in the pathway growing.

Second, we do classify our pathways with the Pathway Ontology, and in our data analysis, pathway similarity at an experimental data level, for example at a transcription level or at a proteomics level, can be combined with ontological information in the Gene Ontology, the processes there. Laurent Winckers published an article where Gene Ontology information is used as a filter, so that you do not get this really huge hairball of data points but really zoom in on particular biological processes. I think there are some interesting links there with what you have been doing.